Archive for the 'Agile' Category

No Frameworks, Part II

Sunday, September 24th, 2017

True. So *assuming* frameworks are necessary takes away a valuable option.

— Matteo Vaccari (@xpmatteo) September 24, 2017

Context is king

Engineering is about making tradeoffs. It is about delivering useful solutions at an acceptable cost. As Carlo Pescio likes to say, a construction engineer knows the properties of materials; she knows when to use steel, concrete, glass or bricks. Every material has particular properties that make it more or less appropriate for achieving a desired effect.

In the world of software, application frameworks are a broad category of “construction materials” for application developers. What are, generally speaking, their properties? In which contexts are they useful or harmful? What kind of results are made easier, or more difficult, by using frameworks?

These are all relevant questions, IF you have a choice of using frameworks or not. All too often, developers assume that frameworks are necessary; the idea of not using one is not taken seriously. Indeed, not using frameworks can be scary. If I don’t use a framework, will my design be maintainable? Will my performance be good enough?

Like everything, it takes practice. I argue that it’s possible to have a very maintainable design and rock-solid performance without frameworks. When you learn to do it, you discover that it is not that difficult, and it does not require you to write mountains of boilerplate code.

Once you know how to do stuff without frameworks, you can make an informed decision whether to use one or not. If you don’t know how, you don’t have a choice.

Frameworks are good for learning

Frameworks are a condensation of proven techniques and design solutions. You can learn a lot by learning frameworks. I, for one, learned a lot from Ruby on Rails. I think we should keep learning frameworks, just for the purpose of learning.

When I was developing my first Mac application, back in 1990, I was struggling, as at the time I didn’t know any other Mac programmer. There was no Internet! Apple back then had an FTP server that was maintained by an Apple employee in his spare time. So at some point I got hold of the MacApp framework; it was one of the first application frameworks to come out. It helped me in many ways; unfortunately, it increased the startup time of my app from 4 to 30 seconds. I was *so* pissed off by this added delay, but at the time, I thought I was better off keeping the framework. I remember I was constantly thinking “the authors of MacApp are very experienced Mac programmers, and they crystallized their knowledge in this framework. If only they had written a *book* instead! Then I could take the bits I need of their knowledge, and leave the ones I don’t need.”

Now I understand that writing an effective book is hard, especially if your primary skill is programming and not book writing. I still think, though, that frameworks are good for learning techniques and design solutions.

Viewed in this light, frameworks can be useful training wheels. For me, the goal is to be able to get rid of them and do just as well, or even better. Once you know how to do that, then using frameworks can be a conscious choice.

Most frameworks are DB-centric

You can generally set up a barebones project much faster with a framework. It’s hard to beat Ruby on Rails on this; with `rails new` you create a working web app, and with generators you can quickly set up a CRUD REST system. Same goes with Spring Boot REST controllers.

Too bad that CRUD does not cut it. Organizing your app around your DB tables is not the way to design an app for a complex domain.

Rails and all the frameworks inspired by it, including Spring Boot, Symfony, Play, etc., are all *DB-centric*. They assume that you will design your system primarily around an entity-relationship diagram. This leads to designing for data first, which leads to doing procedural programming. Let me stress this: DB-centric design leads to procedural programming, which is very different from object-oriented programming. Yes, there are “classes” and “objects” in Spring programs; however, “entities” in a Spring program are usually data structures without behaviour, while most behaviour is in service objects that have behaviour but no data. Which is exactly the opposite of object-oriented programming.
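To make the contrast concrete, here is a minimal sketch in JavaScript (the language used for the code elsewhere in these posts, rather than the Java of a typical Spring program). All the names in it (`InvoiceRecord`, `invoiceService`, `Invoice`) are invented for illustration and do not come from any real project.

```javascript
// Procedural, DB-centric style: the "entity" is a bare record,
// and the behaviour lives in a separate, stateless service.
function InvoiceRecord(lines) {
  this.lines = lines; // public data, no behaviour
}

var invoiceService = {
  total: function(invoice) {
    return invoice.lines.reduce(function(sum, line) {
      return sum + line.quantity * line.unitPrice;
    }, 0);
  }
};

// Object-oriented style: data and behaviour live together,
// and callers never touch the lines directly.
function Invoice() {
  var lines = []; // private state, captured by the closures below

  this.addLine = function(quantity, unitPrice) {
    lines.push({ quantity: quantity, unitPrice: unitPrice });
  };

  this.total = function() {
    return lines.reduce(function(sum, line) {
      return sum + line.quantity * line.unitPrice;
    }, 0);
  };
}
```

Both versions compute the same total. The difference is where the knowledge lives: in the first, any code can reach into `lines`; in the second, the only way in is through the object's own methods.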

Like everything else, procedural programming has a place. It’s generally speaking easier to understand a procedural program than an object-oriented program. Even a bad procedural program is generally easier to understand and fix than a bad object-oriented program. However, procedural programming does not lead to a clean model of the domain, in the DDD sense.

Automating a complex domain in procedural code leads to complex procedural logic, with nested conditionals, and more often than not, with persistence code mixed in with business logic.

True object-oriented code, however, is good for automating complex domains, if you take care to keep persistence well separated from business logic. Moreover, true OO, free from persistence concerns, is good for TDD.

Talk is cheap

Talk is cheap. Show me the code.

Linus Torvalds

In fact, replacing what frameworks do for you may require less than 1000 lines of code. The things that are required of you are:

- knowing the fundamental technologies of the web: how HTTP works

- knowing some fundamental design patterns: the share-nothing architecture and MVC.

- knowing the fundamentals of databases: SQL and database design

- TDD

The way I do it is by developing the application from scratch with TDD, focusing on delivering an end-to-end scenario that is relevant to the customer as early as possible. Perhaps a heavily simplified scenario, but still something that makes sense for the customer.

I cannot show you the code that went into projects done for paying customers. However, I have some code developed for trainings that I’m happy to share.

- My presentation “TDD da un capo all’altro” (TDD from end to end) contains, I think, enough snippets of code for a reader to reconstruct the app, showing how to TDD an app from absolute scratch.

- My training workshop Simple Design in Action contains successive stages of TDD-ing a web application, showing how to use embedded Jetty without Spring Boot and persistence without ORM.

- This revised version of the Video World project is the result of me and a few colleagues removing several layers of frameworks from old ThoughtWorks training material. It shows how to do server-side rendering, with layouts.

- My version of the TodoMVC sample app shows a way to do frontend work without frameworks. I also have a video and a blog post about it.

The main thing is: these are not meant to be production code. They are versions, sometimes simplified, of stuff I did in production code. Even if I could share production code from real projects, it wouldn’t be wise to copy it into your own projects. Good software design is in how the code evolves; you cannot really see it in a snapshot of the code tree. What I suggest you do is try to TDD toy projects until you can do it without too much effort. You can steal some ideas from my training projects, but when you are faced with real problems, you will have to find your own solutions.

When to use third-party code

Am I advocating that we write everything from scratch then?

This is a question worth answering. There is a world of third-party systems, libraries and frameworks out there: let’s classify them along two axes, the first axis being how focused on a single task something is, versus how general-purpose.

The second axis is how easy or hard it is to reproduce the service it provides for you; how easily you can do without it.

In the upper-left quadrant we have things that are focused on doing a single thing, but that are easy to reproduce in your own code. A good example would be the infamous left-pad function.

In the upper-right quadrant we have things that are focused, but difficult to reproduce. For instance, crypto libraries like openssl take a huge amount of skill and knowledge to write. Web servers such as Jetty also go up there. Yes, you can write a simple HTTP server in 10 lines of Java, but it’s not likely to be robust enough for production.

In the lower-right quadrant we have stuff that’s general purpose, yet difficult to reproduce in-house. Operating systems, programming language interpreters and compilers, DBMSs. All system software goes there.

Now, what would go in the lower-left quadrant, where we find general-purpose things that are more or less easily reproduced in-house? If you have the skill, you can reproduce most services that application frameworks do for you without a lot of effort. Mind you, I’m not saying that I can easily rewrite Spring! I’m saying something very different: that I can reproduce with ease what Spring does for me.

- Routing HTTP requests to the appropriate controllers: it can be done in-house, as simply as with a chain of IFs, or as sophisticatedly as with a ~100 line router.

- Injecting dependencies in objects: I can very well instantiate objects explicitly and pass their dependencies in constructors. It does not take a rocket scientist.

- Automated CRUD “repositories”: thank you, I don’t need them. A real repository in the DDD sense is something that encapsulates custom persistence logic. I’m better off implementing it with a simple adapter over JDBC. Carlo Pescio suggests using dbutils; I generally TDD my own.

Etc. etc. In this sense, Spring and similar frameworks are things that you can do without, if you want to.
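The first two points in the list above can be sketched in a few lines. This is an illustration in JavaScript, not code from a real project: the controllers, the in-memory repository and the request/response shapes are all hypothetical, and a real app would sit behind an actual HTTP server.

```javascript
// Hypothetical controllers: each receives its collaborators through the constructor.
function ListMoviesController(movieRepository) {
  this.handle = function(request) {
    return { status: 200, body: movieRepository.findAll().join(', ') };
  };
}

function NotFoundController() {
  this.handle = function(request) {
    return { status: 404, body: 'Not found: ' + request.path };
  };
}

// A stand-in repository; a real one would wrap the database driver.
function InMemoryMovieRepository(titles) {
  this.findAll = function() { return titles.slice(); };
}

// Routing as a plain chain of IFs.
function Router(listMovies, notFound) {
  this.route = function(request) {
    if (request.method === 'GET' && request.path === '/movies') {
      return listMovies.handle(request);
    }
    return notFound.handle(request);
  };
}

// "Dependency injection" is just wiring the objects together by hand, in one place.
function createApp() {
  var repository = new InMemoryMovieRepository(['Alien', 'Brazil']);
  return new Router(new ListMoviesController(repository), new NotFoundController());
}
```

Calling `createApp().route({ method: 'GET', path: '/movies' })` returns the 200 response; any other path falls through to the 404 controller. The whole object graph is visible in `createApp`, so there is nothing to configure and nothing to look up.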

Application servers such as JBoss are general-purpose too, also in the sense that they provide a set of different, not very cohesive services. Most of these services can be obtained in better ways:

- HTTP is best implemented with an embedded web server, as the popularity of Dropwizard and Spring Boot shows.

- Clustering as traditionally provided by application servers is best avoided. It is built on the assumption that web apps maintain expensive session state between one HTTP request and the other. In fact, only poorly designed web apps do that; well designed web apps are stateless or nearly stateless, and if this is the case, they are best served by non-clustered, independent servers. This, in fact, is the “share nothing” architecture.

- I argued in the past that distributed session management in Tomcat is broken. The same argument is likely valid for most Java application servers.

For these reasons, I place JBoss and other JEE application servers in the lower-left quadrant: you can do very well without them.

                  focused on a single task
                              |
                   dbutils    |    Jetty
                   left-pad   |    openssl
                              |
easy to do without -----------+----------- difficult to do without
                              |
                   Spring     |    Linux, JVM
                   JBoss      |    PostgreSQL
                              |
                       general purpose

Now what should be our strategy? Of course, everything on the right hand side should be bought and not done in-house. Unless the value of your system depends, let’s say, on advances in cryptography or DB technology that are not generally available, doing system software or crypto in-house is madness.

Stuff in the upper-left quadrant is a candidate for writing in-house. You can save some time by using dbutils over TDD-ing your own JDBC adapter. However, every dependency on an external library is a liability, as the left-pad incident shows.

Stuff in the lower-left quadrant is probably best avoided, if you have the skills. It has the highest impact on your design and significantly reduces your control over the fate of your system.

Other objections

You make the customer pay for reinventing the wheel

In the end, what really matters is to deliver quality software in reasonable time. I have found that by avoiding the stuff in the left half of the diagram above, teams are able to do so. If that involves some rewriting of common logic, so be it. It turns out that universal wheels such as Spring do not make me go faster.

By your reasoning, we shouldn’t use compilers or standard libraries

See the section above, “When to use third-party code”.

You’ll write your own quirky framework and it will suck

No. I will not write my own framework. I write just the code that I need to solve this particular customer’s problem in this particular moment. I will spend zero time writing code with the expectation that it will be reused elsewhere: not in other projects that I will do for this customer, and not even in this same project next month.

I take YAGNI very seriously. I TDD the simplest code that solves the problem I have today. It’s counter-intuitive, but this makes me go faster. Every time I wrote code for tomorrow, I found I wasted my time.

This does not mean that I write the first thing that works and then move on. I tend to refactor quite a bit before I decide I’m finished with a bit of code. My code is generally very simple and clean as a result of quite a bit of effort. A side effect of this is that my code tends to be flexible and easy to extend.

I repeat: the simplest thing is often the result of sustained research and effort. It’s not the first thing and certainly not the easiest thing.

New developers will have a hard time understanding the codebase

That depends on how good my code is, doesn’t it? If you write simple code (see the answer above), it shouldn’t be too hard to understand. On the other hand, even apps written with frameworks are not always that easy to understand.

Much wiser and more skilled developers wrote the frameworks that you’re despising

Their circumstances may be different. Their programming habits and processes are probably quite a bit different from mine. I really don’t care how experienced, respected or influential framework authors may be; all I care about is the results I get. I evaluate the quality of a solution with respect to how well it helps me reach my goals.

Conclusions?

Engineering is about evaluating options. It seems to me that developers discard the “no-frameworks” option without giving it a lot of thought. In my experience, without frameworks, I was able to help teams deliver high-performance, high-quality code in a short time.

Your circumstances may be different. Don’t trust me; think for yourself. Think critically about technologies and design solutions; and if you care about your craft, try to de-framework an app and see what happens!

No Frameworks, Part 1

Tuesday, September 19th, 2017

Not having to waste time searching Stack Overflow for how to configure the framework saved us a lot of time #abd17 @xpmatteo

— Lorenzo Massacci (@lorenzomassacci) September 16, 2017

Twitter is not good for deep conversations

In a recent talk, I told the story of a very successful software project where I was the tech leader. In this project we built a web service + web application, in Java, without using any framework: no Spring, no Hibernate, no MVC framework, nothing like that. Contrary to prevalent opinion, we delivered quickly, faster than even I had believed.

And the code was reasonably clean and maintainable: five years after I left the project, many developers told me that they liked working on that product, and it was a formative experience.

At the conference, I quipped that “not having to spend time on Stackoverflow to solve framework configuration problems saved us a lot of time,” and that indeed was part of the reason why we delivered fast, but it is not the whole story. Common reactions to #noframeworks include:

- You make the customer pay for reinventing the wheel

- By your reasoning, we shouldn’t use compilers or standard libraries

- You’ll write your own quirky framework

- Developers will have a hard time understanding the codebase

All of the above objections are wrong, if you do things the way I recommend, which is by doing Extreme Programming for real. Doing XP for real, for me, includes focusing on and investing in simplicity, in a way that most developers are not usually willing to do.

Applying lean thinking to software development

Lean thinking says that we should optimize the end-to-end value stream, from when a customer need is identified, to when that need is solved; if that need is to be solved with software, the value is obtained when we deliver working software in a production environment.

What should we optimize? Obviously, for cost and time. However, since costs in software development are dominated by personnel salaries, the cost is dominated by the number of person/days spent to obtain value: hence, we should optimize for delivery time.

Once you know what to optimize for, lean thinking suggests that you observe the software delivery process, looking for waste, and act to remove that waste.

Terminology: In Lean, an activity is classified as “value adding” if it directly contributes to building the value that customers want; it is “waste” if it does not. For instance, if you’re building a chair, cutting and shaping the wood are value-adding activities; moving the wood from the warehouse to the worktable is waste. Sometimes it’s difficult to decide if an activity is waste or not. In this case, a simple thought experiment can help: if we did the activity more and more, would the customer be happier? Or not?

[Waste is further classified in “necessary waste”, something that if we don’t do, we will end up making the customer pay more, and “pure waste”.]

In the world of software development, writing the lines of code that will end up as part of the solution is certainly value-adding. On the other hand, a standup meeting is waste: it does not directly contribute to the delivery of software. If we imagine the standup meeting being extended to two hours or a full day, we clearly see that it would not make the customer any happier :) The standup meeting is probably *necessary waste*, because if we don’t do it, we reduce communication within the team and we end up creating more waste elsewhere. However, it is still waste, and we should try to keep it short.

There is a world of waste in building the wrong product, or the wrong features: you should certainly look into the disciplines of Lean Startup and service design, to make sure you are solving the right problem. I will not talk about these because they’re well covered elsewhere.

The kinds of waste that I want to talk about are the things that take away the best time from developers. Picture this: the developer comes to work; perhaps he’s 20 minutes late because of traffic. He comes in, settles down, checks email, has coffee with his colleagues, chats a little. Nothing wrong with this. Then he has a standup meeting, and perhaps other meetings for discussing planning or technical choices. All of this is still acceptable; they are not value-adding activities, but often they are necessary.

Then comes the magic moment when the developers are in front of the keyboard, with their code open in the editor or IDE, and they are ready to do the value-adding activities that we care about. They write a line of code, which requires, say, one minute, and they need to check if it works; so they start the application, and they wait, because the application takes one or two minutes to compile and start up. They spend another minute logging into the application and clicking through to get to the screen they are working on, and they confirm that their line of code works (or not). Then they write another line or two of code (time required: one minute), and again they need to check if it works, so again they waste three minutes restarting the app and testing it manually.

They could try to save time by batching changes: write 10 or 100 lines of code, and then test if they work. Alas, this does not usually save time, as the number of mistakes increases, and the mistakes interact with each other, and the end result is that debugging 10 or 100 lines of code takes significantly longer than debugging 1 or 2.

The point I’m trying to make is that the compile-link-start-login-navigate-to-the-right-screen cycle eats minutes from the best time that developers have: the time when they are sitting at their workstation, ready to write the lines of code that they know they have to write, having completed all the meeting obligations and coffee breaks and all other necessary wastes.
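The arithmetic behind this point is simple enough to write down. The sketch below uses the figures from the scenario above (one minute of coding, three minutes of restarting and manual checking); the 5-second feedback loop in the second call is a made-up figure for comparison, not a measurement.

```javascript
// Fraction of a change/check cycle that is spent on value-adding work.
function valueAddingShare(codingMinutes, waitingMinutes) {
  return codingMinutes / (codingMinutes + waitingMinutes);
}

// Scenario from the text: 1 minute of coding, 3 minutes of restart + manual check.
var share = valueAddingShare(1, 3); // 0.25

// Hypothetical comparison: the same minute of coding with a 5-second feedback loop.
var fastShare = valueAddingShare(1, 5 / 60);
```

With the slow cycle, only a quarter of the developer's best time goes into writing code; with the short one, more than nine tenths does.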

When you start looking at the work of developers in this way, you start to see the value of dynamic languages, where building the app is instantaneous, and the time saved in this way may trump the supposed gains obtained by static typing. You also start looking at the long compile times of C++ or Scala, and you see that those languages were not designed with the need of fast software delivery in mind.

You also start to see other wasteful activities. When a framework does not do what you want, there is often not a lot of reasoning you can do. The framework is too complex for you to debug it; the documentation is too vast, or too incomplete, and you know from experience that looking there is not likely to provide a solution quickly. So you go to Stackoverflow and hope that someone else has solved your problem. Then you try a possible solution, and if it does not work, you try another. All of this is, of course, pure waste, born of the fact that you are using complex frameworks that may behave in unforeseen ways.

If, on the other hand, you have a problem with a simple app that does not use a framework, you can most likely solve it by reading the code, or stepping through the code in the debugger. All of the code of the app is your own code, and you can step through it with no jumps into framework code, or into the AOP-generated code that Spring or Hibernate, for instance, put into your app and that makes debugging much more difficult. The solution to your problem is in your own code, not on Stackoverflow, and the size of your own code is many orders of magnitude less than the code in frameworks.

Getting back to the length of the compile-and-startup cycle, we see that frameworks do not help: Spring apps, for instance, take minutes to start, because they search the classpath for configuration classes. On the other hand, a no-framework Java web app with an embedded Jetty starts in under a second, and when you debug it in the IDE it has nearly-instant reload of changed code. No need to install JRebel. You don’t have to believe me; just try.

Other framework-related wastes include:

- The time required to learn it.

- The time required to upgrade your app when a new version comes out (this is work that the framework developers inflict on you, without your consent).

- The time required to upgrade an old app that was using an outdated version of the framework (this is especially bad when vulnerabilities are discovered in an old framework, forcing the customer to pay for upgrading a framework that is old and by this time poorly supported. Pure waste, as there is no business reason to change the app).

- Time required to argue on the relative merits of framework A vs framework B, which leads to the mother of all framework-related wastes, the Meeting To Decide Which Frameworks To Use :)

Now this is all about the wastes of frameworks, and we haven’t even started to look into the risks generated by them. In the next part, I will talk about the benefits of frameworks, and how you can get the same benefits without using them.

Smalltalk-Inspired, Frameworkless, TDDed Todo-MVC

Tuesday, August 16th, 2016

UPDATES

TL;DR

I wrote an alternative to the Vanilla-JS example of todomvc.com. I show that you can drive the design from the tests, that you don’t need external libraries to write OO code in JS, and that simple is better :)

Why yet another Todo App?

A very interesting website is todomvc.com. Just as the venerable www.csszengarden.com shows many different ways to render the same page with CSS, todomvc.com shows how to write the same application with different JavaScript frameworks, so that you can compare the strengths and weaknesses of various frameworks and styles.

Given the amount of variety and churn of JS frameworks, it is very good that you can see a small-sized complete example: not so big that it takes ages to understand, but not so small as to seem trivial.

Any comparison, though, needs a frame of reference. A good one in this case would be writing the app with no frameworks at all. After all, if you can do a good job without frameworks, why incur the many costs of ownership of frameworks? So I looked at the vanillajs example provided, and found it lacking. My main gripe is that there is no clear “model” in this code. If this were real MVC, I would expect to find a TodoList that holds a collection of TodoItems; that sort of thing. Alas, the only “model” provided in that example has the unfortunate name of “Model” and is not a model at all; it’s a collection of procedures that read and write from browser storage. So it’s not really a model because a real “model” should be a Platonic, infrastructure-free implementation of business logic.

There are other shortcomings to that implementation, including that the “view” has a “render” method that accepts the name of an operation to perform, making it way more procedural than I would like. This is so different from what I think of as MVC that it made me want to try my hand at doing it better.

Caveats: I’m not a good JS programmer. I don’t know the language well, and I’m sure my code is clumsier than it could be. But I’m also sure that writing a frameworkless app is not a sign of clumsiness, ignorance or old age. Anybody can learn Angular, React or what have you. Learning frameworks is not difficult. What is difficult is to write good code, with or without frameworks. Learning to write good code without frameworks gives you incredible leverage: gone are the hours spent looking on StackOverflow for the magic incantations needed to make framework X do Y. Gone is the cost of maintenance inflicted on you by the framework developers, when they gingerly update the framework from version 3 to version 4. Gone is the tedium of downloading megabytes of compressed code from the server!

So what were my goals?

- Simple design. This means: no frameworks! Really, frameworks are sad. Just write the code that your app needs, and write it well.

- TDD: let the tests drive the design. I try to write tests that talk the language of the app specification, avoiding implementation details as much as possible

- Smalltalk-inspired object orientation. JS generally pushes you to expose the state of objects as public properties. In Smalltalk, the internal state of an object is totally encapsulated. I emulated that with a simple trick that does not require extra libraries.

- I had in the back of my mind the “count” example in Jill Nicola’s and Peter Coad’s OOP book. That is what I think of when I say “MVC”. I tried to avoid specifying this design directly in the tests, though.

- Simple, readable code. You will be the judge of that.
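As an illustration of the Smalltalk-inspired goal in the list above: one way to hide an object's state in plain JS, with no libraries, is to keep it in local variables of the constructor function and expose only methods. The sketch below assumes that style, which is also the style of the ClearCompletedView code shown further down; it is not copied from the app, and `isCompleted` is a name invented here.

```javascript
// State lives in local variables of the constructor, not in properties of `this`.
function TodoItem(text) {
  var completed = false; // invisible from outside the object

  this.text = function() { return text; };
  this.isCompleted = function() { return completed; };
  this.complete = function(isComplete) { completed = isComplete; };
}

var item = new TodoItem('Pippo');
item.complete(true);
// item.completed is undefined: the only way to the state is through the methods.
```

The cost of this style is that every instance carries its own copies of the methods; for an app of this size that is unlikely to matter.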

How did it go?

The first time around I tried to work in a “presenter-first” style. After a while, I gave up and started again from scratch. The code was ugly, and I felt that I was committing the classic TDD mistake, to force my preconceived design. So I started again and the second time was much nicer.

You cannot understand a software design process just by looking at the final result. It’s only by observing how the design evolved that you can see how the designer thinks. When I started again from scratch, my first tests looked like this:

    beforeEach(function() {
      fixture = document.createElement('div');
      $ = function(selector) { return fixture.querySelector(selector); }
    })

    describe('an empty todo list', function() {
      it('returns an empty html list', function() {
        expect(new TodoListView([]).render()).to.equal('<ul class="todo-list"></ul>');
      });
    });

    describe('a list of one element', function() {
      it('renders as html', function() {
        fixture.innerHTML = new TodoListView(['Pippo']).render();
        expect($('ul.todo-list li label').textContent).equal('Pippo');
        expect($('ul.todo-list input.edit').value).equal('Pippo');
      });
    });

The above tests are not particularly nice, but they are very concrete: they check that the view returns the expected HTML, with very few assumptions on the design. Note that the “model” in the beginning was just an array of strings.

The final version of those tests does not change much on the surface, but the logic is different:

    beforeEach(function() {
      fixture = createFakeDocument('<ul class="todo-list"></ul>');
      todoList = new TodoList();
      view = new TodoListView(todoList, fixture);
    })

    it('renders an empty todo list', function() {
      view.render();
      expect($('ul.todo-list').children.length).to.equal(0);
    });

    it('renders a list of one element', function() {
      todoList.push(aTodoItem('Pippo'));
      view.render();
      expect($('li label').textContent).equal('Pippo');
      expect($('input.edit').value).equal('Pippo');
    });

The better solution, for me, was to pass the document to the view object, call its render() method, and check how the document was changed as a result. This places almost no constraints on how the view should do its work. This, to me, was key to letting the test drive the design. I was free to change and simplify my production code, as long as the correct code was being produced.
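The shape of this testing style can be shown outside a browser with a hand-rolled stand-in for the document. This is only a sketch under stated assumptions: `StubDocument` and `CounterView` are invented for the illustration, and the real tests described here run against the real DOM rather than a stub.

```javascript
// Hypothetical stand-in for a DOM document: it knows a single element.
function StubDocument(selector) {
  var element = { textContent: '' };
  this.querySelector = function(requested) {
    return requested === selector ? element : null;
  };
}

// A view in the style described above: it receives the model and the document,
// and render() is the only thing a test needs to call.
function CounterView(items, document) {
  this.render = function() {
    document.querySelector('.todo-count').textContent = items.length + ' items left';
  };
}

var doc = new StubDocument('.todo-count');
new CounterView(['Pippo', 'Pluto'], doc).render();
// A test now inspects the document, not the internals of the view.
```

Because the check is made on `doc`, the view is free to build its output with string concatenation, templates or DOM calls without any test having to change.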

Of course, not all the tests check the DOM. We have many tests that check the model logic directly, such as

    it('can contain one element', function() {
      todoList.push('pippo');
      expect(todoList.length).equal(1);
      expect(todoList.at(0).text()).equal('pippo');
    });

Out of a total of 585 test LOCs, we have 32% dedicated to testing the models, 7% for testing repositories, 4% testing event utilities and 57% for testing the “view” objects.

How long did it take me?

I did not keep a scrupulous count of pomodoros, but since I committed very often I can estimate the time taken from my activity on Git. Assuming that every stretch of commits starts with about 15 minutes of work before the first commit in the stretch, it took me about 18 and a half hours of work to complete the second version, distributed over 7 days (see my calculations in this spreadsheet). The first version, the one I discarded, took me about 6 and a half hours, over two days. That makes it 25 hours of total work.
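The estimate described above can be sketched as a small function. The 15-minute lead-in comes from the text; the rule for deciding where one stretch ends and the next begins is not stated, so the `maxGapMinutes` threshold below is an assumption, and the function is an illustration rather than a reconstruction of the spreadsheet.

```javascript
// Estimate working time, in minutes, from commit timestamps (ms since the epoch).
// Assumption: commits more than `maxGapMinutes` apart belong to different stretches.
function estimateWorkMinutes(commitTimes, maxGapMinutes, leadInMinutes) {
  if (commitTimes.length === 0) return 0;
  var sorted = commitTimes.slice().sort(function(a, b) { return a - b; });
  var total = leadInMinutes; // work done before the first commit of the first stretch
  for (var i = 1; i < sorted.length; i++) {
    var gap = (sorted[i] - sorted[i - 1]) / 60000;
    if (gap > maxGapMinutes) {
      total += leadInMinutes; // a new stretch begins
    } else {
      total += gap; // still working in the same stretch
    }
  }
  return total;
}
```

For example, commits at minutes 0, 10 and 30, followed by a long pause and commits at minutes 300 and 320, count as two stretches with a 60-minute threshold: 15 + 10 + 20 for the first and 15 + 20 for the second, 80 minutes in total.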

What does it look like?

The initialization code is in index.html:

    <script src="js/app.js"></script>
    <script>
      var repository = new TodoMvcRepository(localStorage);
      var todoList = repository.restore();
      new TodoListView(todoList, document).render();
      new FooterView(todoList, document).render();
      new NewTodoView(todoList, document).render();
      new FilterByStatusView(todoList, document).render();
      new ClearCompletedView(todoList, document).render();
      new ToggleAllView(todoList, document).render();
      new FragmentRepository(localStorage, document).restore();
      todoList.subscribe(repository);
    </script>

I like it. It creates a bunch of objects, and starts them. The very first action is to create a repository, and ask it to retrieve a TodoList model from browser storage. The FragmentRepository should perhaps be better named FilterRepository. The todoList.subscribe(repository) makes the repository subscribe to the changes in the todoList model. This is how the model is saved whenever there’s a change.
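The subscribe mechanism can be sketched in a few lines. What follows is a simplified stand-in, not the code of the app: this `TodoList` only stores strings, `SketchRepository` and `FakeStorage` are invented names, the `'todos'` storage key is hypothetical, and passing the model to `notify` is an assumption about how the repository gets hold of what it must save.

```javascript
// A model that notifies its subscribers whenever it changes.
function TodoList() {
  var self = this;
  var items = [];
  var subscribers = [];

  this.subscribe = function(subscriber) { subscribers.push(subscriber); };
  this.push = function(text) {
    items.push(text);
    subscribers.forEach(function(s) { s.notify(self); });
  };
  this.toArray = function() { return items.slice(); };
}

// A repository that saves the model every time it is notified.
// `storage` only needs setItem/getItem, like the browser's localStorage.
function SketchRepository(storage) {
  this.notify = function(todoList) {
    storage.setItem('todos', JSON.stringify(todoList.toArray()));
  };
}

// In-memory replacement for localStorage, so the sketch runs outside a browser.
function FakeStorage() {
  var data = {};
  this.setItem = function(key, value) { data[key] = String(value); };
  this.getItem = function(key) {
    return Object.prototype.hasOwnProperty.call(data, key) ? data[key] : null;
  };
}
```

The model knows nothing about storage; it only knows that its subscribers respond to `notify`. Views and the repository can therefore subscribe in exactly the same way.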

Each of the “view” objects takes the model and the DOM document as parameters. As you will see, these “views” also perform the function of controllers. This is how they came out of the TDD process. They probably don’t conform exactly to MVC, but who cares, as long as they are small, understandable and testable?

Each of the “views” handles a particular UI detail: for instance, the ClearCompletedView is in js/app.js:

    function ClearCompletedView(todoList, document) {
      todoList.subscribe(this);

      this.notify = function() {
        this.render();
      }

      this.render = function() {
        var button = document.querySelector('.clear-completed');
        button.style.display = (todoList.containsCompletedItems()) ? 'block' : 'none';
        button.onclick = function() {
          todoList.clearCompleted();
        }
      }
    }

The above view subscribes itself to the todoList model, so that it can update the visibility of the button whenever the todoList changes, as the notify method will then be called.

The test code is in the test folder. For instance, the test for the ClearCompletedView above is:

describe('the view for the clear complete button', function() {

var todoList, fakeDocument, view;

beforeEach(function() {

todoList = new TodoList();

todoList.push('x', 'y', 'z');

fakeDocument = createFakeDocument('<button class="clear-completed">Clear completed</button>');

view = new ClearCompletedView(todoList, fakeDocument);

})

it('does not appear when there are no completed', function() {

view.render();

expectHidden($('.clear-completed'));

});

it('appears when there are any completed', function() {

todoList.at(0).complete(true);

view.render();

expectVisible($('.clear-completed'));

});

it('reconsider status whenever the list changes', function() {

todoList.at(1).complete(true);

expectVisible($('.clear-completed'));

});

it('clears completed', function() {

todoList.at(0).complete(true);

$('.clear-completed').onclick();

expect(todoList.length).equal(2);

});

function $(selector) { return fakeDocument.querySelector(selector); }

});

Things to note:

- I use a real model here, not a fake. This gives me confidence that the view and the model work correctly together, and allows me to drive the development of the containsCompletedItems() method in TodoList. However, it does couple the view and the model tightly.

- I use a simplified “document” here, that only contains the fragment of index.html that this view is concerned with. However, I’m testing with the real DOM in a real browser, using Karma. This gives me confidence that the view will interact correctly with the real browser DOM. The only downside is that the view knows about the “clear-completed” class name.

- The click on the button is simulated by invoking the onclick handler.

If you are curious, here is the implementation of createFakeDocument:

function createFakeDocument(html) {

var fakeDocument = document.createElement('div');

fakeDocument.innerHTML = html;

return fakeDocument;

}

It’s that simple to test JS objects against the real DOM.

All the production code is in file js/app.js. An example model is TodoItem:

function TodoItem(text, observer) {

var complete = false;

this.text = function() {

return text;

}

this.isCompleted = function() {

return complete;

}

this.complete = function(isComplete) {

complete = isComplete;

if (observer) observer.notify()

}

this.rename = function(newText) {

if (text == newText)

return;

text = newText.trim();

if (observer) observer.notify()

}

}

As you can see, I used a very simple style of object-orientation. I do not use (or need here) prototype inheritance, but I do encapsulate object state well.

I’m not showing the TodoList model because it’s too long :(. I don’t like this, but I don’t have a good idea at this moment to make it smaller. Another class that’s too long and complex is TodoListView, with about 80 lines of code. I could probably break it down into TodoListView and TodoItemView, making it a composite view with a smaller view for each TodoItem. That would require creating and destroying the item views dynamically. I don’t know if that would be a good idea; I haven’t tried it yet.

Comparison with other Todo-MVC examples

How does it compare to the other examples? There is no way I can read all of the examples, let alone understand them. However, there is a simple metric that I can use to compare my outcome: simple LOC, counting just the executable lines and omitting comments and blank lines. After all, if you use a framework, I expect you to write less code; otherwise, it seems to me that either the framework is not valuable, or that you can’t use it well, which means that it’s not valuable to you. This is the table of LOCs, computed with Cloc. (Caveat: I tried to exclude all framework and library code, but I’m not sure I did that correctly for all examples.) My version is the one labelled “vanillajs/xpmatteo” in bold. I’m excluding test code.

| LOC | Example |
| ---: | --- |
| 1204 | typescript-angular/js |
| 1185 | ariatemplates/js |
| 793 | aurelia |
| 790 | socketstream |
| 782 | typescript-react/js |
| 643 | gwt/src |
| 631 | closure/js |
| 597 | dojo/js |
| 594 | puremvc/js |
| 564 | vanillajs/js |
| 529 | dijon/js |
| 508 | enyo_backbone/js |
| 489 | typescript-backbone/js |
| 481 | vanilla-es6/src |
| 479 | flight/app |
| 475 | lavaca_require/js |
| 468 | componentjs/app |
| 432 | duel/src/main |
| 383 | polymer/elements |
| 364 | cujo/app |
| 346 | sapui5/js |
| **321** | **vanillajs/xpmatteo** |
| 317 | scalajs-react/src/main/scala |
| 311 | backbone_marionette/js |
| 310 | ampersand/js |
| 295 | sammyjs/js |
| 295 | backbone_require/js |
| 287 | extjs_deftjs/js |
| 284 | durandal/js |
| 280 | rappidjs/app |
| 276 | thorax/js |
| 271 | troopjs_require/js |
| 265 | angular2/app |
| 256 | angularjs/js |
| 249 | mithril/js |
| 242 | thorax_lumbar/src |
| 235 | chaplin-brunch/app |
| 233 | vanilladart/web/dart |
| 233 | somajs_require/js |
| 232 | serenadejs/js |
| 226 | emberjs/todomvc/app |
| 224 | spine/js |
| 224 | exoskeleton/js |
| 214 | backbone/js |
| 213 | meteor |
| 207 | angular-dart/web |
| 190 | somajs/js |
| 167 | riotjs/js |
| 164 | react-alt/js |
| 156 | angularjs_require/js |
| 147 | ractive/js |
| 146 | olives/js |
| 146 | knockoutjs_require/js |
| 145 | canjs_require/js |
| 139 | atmajs/js |
| 132 | firebase-angular/js |
| 130 | foam/js |
| 129 | canjs/js |
| 124 | vue/js |
| 99 | knockback/js |
| 98 | react/js |
| 96 | angularjs-perf/js |
| 34 | react-backbone/js |
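To make the metric concrete, here is a naive sketch of what “counting executable lines” means for a JavaScript source string. It is not how Cloc works internally; the function name countLoc is made up for this illustration, and the comment handling is deliberately simplistic (it only recognises comments that start at the beginning of a line, and it ignores comment markers inside string literals).

```javascript
// Naive LOC counter: skips blank lines, // line comments,
// and /* ... */ block comments that start at the beginning of a line.
function countLoc(source) {
  var inBlockComment = false;
  var count = 0;
  source.split('\n').forEach(function(line) {
    var trimmed = line.trim();
    if (inBlockComment) {
      // Still inside a block comment: look for its end, count nothing.
      if (trimmed.indexOf('*/') !== -1) inBlockComment = false;
      return;
    }
    if (trimmed === '' || trimmed.indexOf('//') === 0) return;
    if (trimmed.indexOf('/*') === 0) {
      if (trimmed.indexOf('*/') === -1) inBlockComment = true;
      return;
    }
    count++;
  });
  return count;
}

// Usage on a small sample
var sample = [
  '// a comment',
  '',
  'function f() {',
  '  /* block',
  '     comment */',
  '  return 1; // a trailing comment does not hide the code',
  '}'
].join('\n');
var sampleLoc = countLoc(sample);
```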

Things I learned

It’s been fun and I learned a lot about JS and TDD. Many framework-based solutions are shorter than mine, and that’s to be expected. However, all you need to know to understand my code is JS.

TDD works best when you try to avoid pushing it to produce your preconceived design ideas. It’s much better when you follow the process: write tests that express business requirements, write the simplest code to make the tests pass, refactor to remove duplication.

Working in JS is fun; however, not all things can be tested nicely with the approach I used here. I often checked in the browser that the features I had test-driven were really working. Sometimes they didn’t, because I had forgotten to change the “main” code in index.html to use the new feature. At one point I had an unwanted interaction between two event handlers: the handler for the onchange event fired when the edit text was changed by the onkeyup handler. I wasn’t able to write a good test for this, so I resorted to simply testing that the onkeyup handler removed the onchange handler before acting on the text. (This is not very good because it tests the implementation instead of the outcome.)

You can do a lot of work without jQuery, especially since there is the querySelector API. However, in real work I would probably still use jQuery, to improve cross-browser compatibility. It would probably also make my code simpler.

Pattern: Testable Screens

Tuesday, March 29th, 2016

When you are developing a complex application, be it web, mobile or whatever, it’s useful to be able to launch any screen immediately and independently from the rest of the system. By “screen” I mean a web page, an Android activity, a Swing component, or whatever it is called in the UI technology that you are using. For instance, in an ecommerce application, I would like to be able to immediately show the “thank you for your purchase” page, without going through logging in, adding an item to the cart and paying.

The benefits of this simple idea are many:

- You can easily demo user stories that are related to that screen

- You can quickly test UI changes

- You can debug things related to that page

- You can spike variations

- The design of the screen is cleaner and less expensive to maintain.

Unfortunately, teams are often not able to do this, because screens are tightly coupled to the rest of the application. For instance, in JavaScript single-page applications, it would be good to be able to launch a view without having to start a server. Often this is not possible, because the view is tightly coupled to the Ajax code that fetches the data it needs from the server.

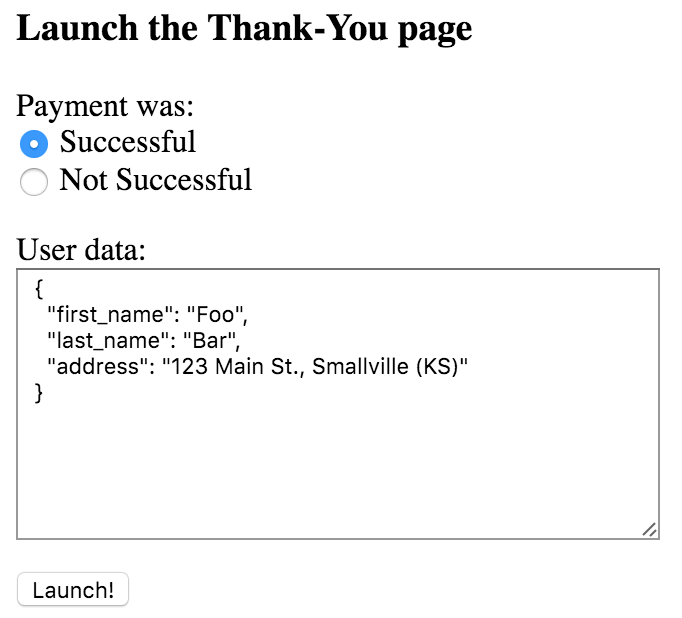

The way out of this problem is to decouple the screen from its data sources. In a web application, I would launch a screen by going to a debug page that allows me to set up some test data, and then launch the page. For instance:

Note that the form starts pre-populated with default data, so that I can launch the desired screen with a single click.

Making screens decoupled from their data sources does, in my opinion, generally improve the design of the application. Making things more testable has a general positive impact on quality.
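As a sketch of this decoupling in JavaScript: the view below receives its data source as a parameter, so a debug page can hand it canned data while production code hands it an object that talks to the server. All the names here (ThankYouView, CannedOrderSource, the loadOrder method, the order fields) are hypothetical, and the view hands its markup to a callback instead of touching the DOM, just to keep the example self-contained.

```javascript
// Hypothetical view for a "thank you for your purchase" screen.
// It knows nothing about Ajax: it only asks its data source for an order.
// (No HTML escaping here; this is a sketch, not production code.)
function ThankYouView(orderSource) {
  this.render = function(show) {
    orderSource.loadOrder(function(order) {
      show('<h1>Thank you, ' + order.customerName + '!</h1>' +
           '<p>Order ' + order.id + ' total: ' + order.total + '</p>');
    });
  };
}

// Data source for the debug page: returns whatever test data it was given.
function CannedOrderSource(order) {
  this.loadOrder = function(callback) {
    callback(order);
  };
}

// Launching the screen from a debug page, with default test data
var html;
var debugSource = new CannedOrderSource({ id: 42, customerName: 'Ada', total: '19.90 EUR' });
new ThankYouView(debugSource).render(function(markup) { html = markup; });
```

In production, the same view would be given a source whose loadOrder performs the Ajax call; the view code would not change.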

Bureaucratic tests

Monday, March 28th, 2016

The TDD cycle should be fast! We should be able to repeat the red-green-refactor cycle every few minutes. This means that we should work in very small steps. Kent Beck in fact is always talking about “baby steps.” So we should learn how to make progress towards our goal in very small steps, each one taking us a little bit further. Great! How do we do that?

Example 1: Testing that “it’s an object”

In the quest for “small steps”, I sometimes see recommendations that we write things like these:

it("should be an object", function() {

assertThat(typeof chat.userController === 'object')

});

which, of course, we can pass by writing

chat.userController = {}

What is the next “baby step”?

it("should be a function", function() {

assertThat(typeof chat.userController.login === 'function')

});

And, again, it’s very easy to make this pass.

chat.userController = { login: function() {} }

I think these are not the right kind of “baby steps”. These tests give us very little value.

Where is the value in a test? In my view, a test gives you two kinds of value:

- Verification value, where I get assurance that the code does what I expect. This is the tester’s perspective.

- Design feedback, where I get information on the quality of my design. And this is the programmer’s perspective.

I think that in the previous two tests, we didn’t get any verification value, as all we were checking is the behaviour of the typeof operator. And we didn’t get any design feedback either. We checked that we have an object with a method; this does not mean much, because any problem can be solved with objects and methods. It’s a bit like judging a book by checking that it contains written words. What matters is what the words mean. In the case of software, what matters is what the objects do.

Example 2: Testing UI structure

Another example: there are tutorials that suggest that we test an Android app’s UI with tests like this one:

public void testMessageGravity() throws Exception {

TextView myMessage =

(TextView) getActivity().findViewById(R.id.myMessage);

assertEquals(Gravity.CENTER, myMessage.getGravity());

}

Which, of course, can be made to pass by adding one line to a UI XML file:

<TextView

android:id="@+id/myMessage"

android:gravity="center"

/>

What have we learned from this test? Not much, I’m afraid.

Example 3: Testing a listener

This last example is sometimes seen in GUI/MVC code. We are developing a screen of some sort, and we try to make progress towards the goal of “when I click this button, something interesting happens.” So we write something like this:

@Test

public void buttonShouldBeConnectedToAction() {

assertEquals(1, button.getActionListeners().length);

assertTrue(button.getActionListeners()[0]

instanceof ActionThatDoesSomething);

}

Once again, this test does not give us much value.

Bureaucracy

The above tests are all examples of what Keith Braithwaite calls “pseudo-TDD”:

1. Think of a solution

2. Imagine a bunch of classes and functions that you just know you’ll need to implement (1)

3. Write some tests that assert the existence of (2)

4. [… go read Keith’s article for the rest of his thoughts on the subject.]

In all of the above examples, we start by thinking of a line of production code that we want to write. Then we write a test that asserts that that line of code exists. This test does nothing but give us permission to write that line of code: it’s just bureaucracy!

Then we write the line of code, and the test passes. What have we accomplished? A false sense of progress; a false sense of “doing the right thing”. In the end, all we did was waste time.

Sometimes I hear developers claim that they took longer to finish, because they had to write the tests. To me, this is nonsense: I write tests to go faster, not slower. Writing useless tests slows me down. If I feel that testing makes me slower, I should probably reconsider how I write those tests: I’m probably writing bureaucratic tests.

Valuable tests

Bureaucratic tests are about testing a bit of solution (that is, a bit of the implementation of a solution). Valuable tests are about solving a little bit of the problem. Bureaucratic tests are usually testing structure; valuable tests are always about testing behaviour. The right way to do baby steps is to break down the problem into small bits (not the solution). If you want to do useful baby steps, start by writing a list of all the tests that you think you will need.

In Test-Driven Development: by Example, Kent Beck attacks the problem of implementing multi-currency money starting with this to-do list:

$5 + 10 CHF = $10 if rate is 2:1

$5 * 2 = $10

Note that these tests are nothing but small slices of the problem. In the course of developing the solution, many more tests are added to the list.
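For example, the second item on the list, $5 * 2 = $10, translates directly into a behavioural example plus the simplest code that satisfies it. This is a sketch in JavaScript, not Beck’s code (the book’s examples are in Java); the constructor and method names are chosen for this illustration only.

```javascript
// Simplest object that satisfies "$5 * 2 = $10".
function Dollar(amount) {
  this.amount = function() {
    return amount;
  };
  // Multiplying returns a new Dollar; the original is left unchanged.
  this.times = function(multiplier) {
    return new Dollar(amount * multiplier);
  };
  this.equals = function(other) {
    return other instanceof Dollar && other.amount() === amount;
  };
}

// The behaviour we care about, stated as an example
var product = new Dollar(5).times(2);
var isTenDollars = product.equals(new Dollar(10));
```

Note that the example talks about money, not about which objects or methods exist: the structure falls out of the behaviour.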

Now you are probably wondering what I would do instead of the bureaucratic tests that I presented above. In each case, I would start with a simple example of what the software should do. What are the responsibilities of the userController? Start there. For instance:

it("logs in an existing user", function() {

var user = { nickname: "pippo", password: "s3cr3t" }

chat.userController.addUser(user)

expect(chat.userController.login("pippo", "s3cr3t")).toBe(user)

});
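For illustration, one minimal implementation that would satisfy a test like this could look as follows. The chat namespace, userController, addUser and login come from the test; everything else (the internal lookup table, returning null on a failed login, comparing plain-text passwords) is an assumption made to keep the sketch short.

```javascript
var chat = {};

chat.userController = (function() {
  // Lookup table without a prototype, so nicknames such as "toString"
  // cannot collide with inherited properties.
  var usersByNickname = Object.create(null);

  return {
    addUser: function(user) {
      usersByNickname[user.nickname] = user;
    },
    // Returns the stored user when the password matches, null otherwise.
    login: function(nickname, password) {
      var user = usersByNickname[nickname];
      return (user && user.password === password) ? user : null;
    }
  };
})();

// Usage
var pippo = { nickname: 'pippo', password: 's3cr3t' };
chat.userController.addUser(pippo);
var loggedIn = chat.userController.login('pippo', 's3cr3t');
```

The next tests on the list would then be about other behaviour, such as what happens with a wrong password or an unknown nickname.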

In the case of the Android UI, I would probably test it by looking at it; the looks of the UI have no behaviour that I can test with logic. My test passes when the UI “looks OK”, and that I can only test by looking at it (see also Robert Martin’s opinion on when not to TDD). I suppose that some of it can be automated with snapshot testing, which is a variant of the “golden master” technique.

In the case of the GUI button listener, I would not test it directly. I would probably write an end-to-end test that proves that when I click the button, something interesting happens. I would probably also have more focused tests on the behaviour that is being invoked by the listener.

Conclusions

Breaking down a problem into baby steps means that we break the problem to solve into very small pieces, not the solution. Our tests should always speak about bits of the problem; that is, about things that the customer actually asked for. Sometimes we need to start by solving an arbitrarily simplified version of the original problem, like Kent Beck and Bill Wake do in this article I found enlightening; but it’s always about testing the problem, not the solution!

OOP is underrated

Monday, March 21st, 2016

I recently came upon a thread where Object-Oriented Programming was being questioned because of excessive complexity, ceremony, layers,… and because of OOP’s insistence on treating everything as an object, which some feel runs counter to most people’s intuition. Similar threads keep appearing, where OOP is being questioned and other approaches, like functional programming, are seen as a cure for the OOP “problem”.

My answer touches upon many points, and I wanted to share it with you.

Encapsulation is a key thing in OOP, and it’s just part of the larger context. Abstract Data Types also do encapsulation. OOP is more than that; the key idea is that OOP enables the building of a model of the problem you want to solve; and that model is reasoned about with the spatial, verbal and operational reasoning modes that we all use to solve everyday problems. In this sense OOP culture is strongly different from the ADT and formal math culture.

Math is very powerful. It enables us to solve problems that we wouldn’t be able to solve easily by intuition alone. A good mathematical model can make simple what seems to be very complex. Think how Fourier transforms make it easy to reason about signals. Think how a little mathematical reasoning makes it easy to solve the Mutilated Chessboard problem. In fact, a good mathematical model can reduce the essential complexity of a problem. (You read right: reducing the essential complexity. I think that we are never sure what the essential complexity of a problem really is. There might always be another angle or an insight to be had that would make it simpler than what we thought it was. Think of the parallel with Kolmogorov complexity: you never know what the K complexity of a string really is.)

However, mathematical reasoning is difficult and rare. If you can use it, then more power to you! My feeling is that many recent converts to FP fail to see the extent of the power of mathematical models and limit themselves to using FP as a fancy procedural language. But I digress.

My point is that if you want to reach the point of agile maturity where programming is no longer the bottleneck, and we deliver when the market is ready, not when we finally manage to finish coding (two stars on the Shore/Larsen model), building the right model of the problem is an essential ingredient. You should build a model that is captured as directly as possible in code. If you have a mathematical model, it’s probably a good fit for a functional programming language. However, we don’t always have a good mathematical model of our problems.

For many problems, we can more readily find spatial/verbal/operational intuitive models. When we describe an OO model with phrases like “This guy talks to that guy”, that is the sort of description that made Dijkstra fume with disdain! Yet this way of reasoning is simple, immediate and useful. Some kinds of problems readily lend themselves to being modeled this way. You may think of it as programming a simulation of the problem. It leverages a part of our brain that (unlike mathematical reasoning) we all use all the time. These operational models, while arguably less powerful than mathematical models, are easier to reason about and to communicate.

Coming to the perception of the excessive “ceremony” and “layers” of OOP, I have two points:

- Most “OOP” that we see is not OOP at all. Most programs are conceived starting with the data schema. That’s the opposite of OOP! If you want to do OOP, you start with the behaviour that is visible from the outside of your system, not with the data that lie within it. It’s too bad that most programming culture is so deeply data-oriented that we don’t even realise this. It’s too bad that a lot of frameworks and tooling imply and push towards data-centric design: think JPA and Rails-style ActiveRecord, where you design your “object” model as a tightly-coupled copy of a data model.

- Are we practicing XP? When we practice XP, we introduce stuff gradually as we need it. An abstraction is introduced when there is a concrete need. A layer is introduced when it is shown to simplify the existing program. When we introduce layers and frameworks upfront (anybody here do a “framework selection meeting” before starting coding? :-) ) we usually end up with extra complexity. But that’s nothing new: XP has been teaching us to avoid upfront design for a long time.

For more on how OOP thinking differs from formalist thinking, see Object Thinking by David West. I also did a presentation on this subject (video in Italian).

The time that really matters

Thursday, January 14th, 2016

TL;DR: write apps that start from the command line within one second.

One of the core principles of Lean is waste reduction. There are many kinds of waste: for instance, overproduction means to write software that is not needed, or that is “gold-plated”, that is, done to a level of completeness that exceeds the customer’s needs.

Here I would like to talk about another kind of waste: waiting.

Picture this: you are a programmer. You arrived at work this morning. You attended the stand-up meeting. You attended an ad-hoc technical meeting. You had a coffee break with your colleagues. You pick up a user story from the card wall and you discuss its details with the product owner. You finally sit down in front of the keyboard, pairing with a fellow programmer. Now your core time starts: the time when you program; the time when you are really doing value-adding work. You start by checking the screen of the application where you will have to add functionality: so you start the application… and wait. And wait. And wait.

Your application may be able to run thousands of transactions per second, yet it takes minutes to boot.

The time that your application takes to boot is a tax that you keep paying, tens or hundreds of times per day. This waiting time cuts into your best time: the time when you are in front of the keyboard, well-rested, ready to do your best work. This tax is being paid by all the programmers in the team, by all the testers and by all those who need to redeploy the application.

I hear you saying: “But, but, but… I do TDD! I don’t need to boot my app that often!”. OK, it’s very good that you do TDD. If you do it well, which means that you will mostly do microtests instead of integrated tests, then you will not have to reboot your application all the time. And yet… there are times when we really need to reboot the app. We will have to write at least some integrated tests. Sometimes we will have to debug. Sometimes we will have to test the thing manually. Sometimes we are tweaking the UI, and it makes little sense to write tests for that. Sometimes, despite our best intentions, we don’t find a way to do TDD well. For all of these reasons, it really pays to have an application that can be started within one second.

My favourite way to implement a web application in Java is to use an embedded Jetty server. This is a technique that I’ve been teaching for years. You may see an example in the GitHub repository for my Simple Design workshop. Running the program is simply a matter of executing

./gradlew compileJava && script/run.sh

which takes about a second. If you run it from Eclipse in debug mode, it reloads automatically any change you make.

Compare this to a program written with Spring Boot. Let’s consider the Getting Started example application. You compile and run it with

./gradlew build && java -jar build/libs/gs-spring-boot-0.1.0.jar

and it will run in 6.8 seconds (measured by hand with a stopwatch on my 8-core Mid-2015 MacBook Pro). This is significantly worse than 1 second, but still tolerable. The problem is that by design, Spring looks for components in its classpath. This autoconfiguration takes an increasing amount of time as the application grows in size. Real-world services written with Spring Boot take 20-30 seconds to boot. That’s definitely too much in my view… especially when it’s easy to stay under one second.

That’s why I don’t like to autoconfigure stuff: I just configure my components explicitly in my main partition.
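Here is a sketch of what explicit configuration looks like, written in JavaScript rather than Java to keep it short. All the component names are hypothetical. The point is that the main function builds every object by hand and passes dependencies through constructors, so nothing has to be discovered by scanning at startup.

```javascript
// Hypothetical components; each one receives its collaborators explicitly.
function InMemoryOrderRepository() {
  var orders = [];
  this.add = function(order) { orders.push(order); };
  this.count = function() { return orders.length; };
}

function OrderService(repository, clock) {
  this.placeOrder = function(item) {
    repository.add({ item: item, placedAt: clock.now() });
  };
}

// The "main partition": the only place that knows which concrete
// components exist and how they fit together.
function main() {
  var clock = { now: function() { return Date.now(); } };
  var repository = new InMemoryOrderRepository();
  var service = new OrderService(repository, clock);
  return { service: service, repository: repository };
}

// Usage
var app = main();
app.service.placeOrder('book');
```

Startup cost here is just the cost of a handful of constructor calls, and it stays readable as a plain list of what the application is made of.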

Protect your core time: make it so that your app can be restarted within one second. That’s a real productivity gain for the whole team.

References

Uncle Bob blogged about this very subject in The Mode B imperative

Greg Young describes how and why to configure components explicitly in his talk 8 Lines of Code

The Semaphores Kata

Tuesday, December 23rd, 2014

This is an exercise to explore how TDD relates to graphical user interfaces, how to work with time, and how to obtain complex behaviour by composing simpler behaviours.

It is inspired by an exercise presented in the book ATDD By Example by Markus Gärtner.

First step

We want an app that shows a working semaphore, with the three usual lights red, green and amber. The semaphore works with the following cycle:

- Initially only the red light is on.

- After 60 seconds, the red light goes off, the green light is turned on.

- After 30 seconds, the amber light is turned on.

- After 10 seconds, both amber and green go off, and red is turned on.

- And again and again…

This can be done in Java with a Swing user interface, or in JavaScript with an HTML user interface.

Demo: you should show the GUI with the lights turning on and off. You may speed up the tempo just to make the demo less boring :-)

Second step

We must now handle a crossing with four semaphores, like this:

o

o (B0)

o

o o

o (A0) o (A1)

o o

o

o (B1)

o

We have four semaphores A0, A1, B0, B1. A0 and A1 must always show the same lights. B0 and B1 must always show the same lights. B0’s cycle is delayed by 50 seconds with respect to A0. As a consequence, there should NEVER be a green light on all four semaphores! And there should be a 10-second safety interval when all four semaphores show red. The following diagram shows what the semaphores should show.

Every letter represents 10 seconds

time: ----------->

A0 and A1: RRRRRRGGGARRRRRRGGGA

B0 and B1: RGGGARRRRRRGGGARRRRR

R = Red light

G = Green light

A = Green + Amber light

For the instructor

How to test a GUI? (Hint: you don’t; you apply model-view separation and move all of the logic to the model. You should read the “Humble Dialog Box” paper.) There should be a “Semaphore” domain object.

How to test the passing of time? (Hint: the most productive way is to assume that the app will receive a “tick” message every second. This is also an instance of model-view separation; the “tick” message is sent by a clock. This is just the same as if there was a user clicking on a button that advances the simulation by one second.)

How do participants demo the application? Insist on seeing the application work for real. A demo that consists of showing unit tests passing is NOT satisfactory. Try to make developers use both unit tests and manual tests. Insist on concrete, demoable progress.

The goal of the second step is to check that the developers use two (or four) instances of the Semaphore object from the first step, instead of making a big, monolithic “two-way semaphore” that controls all of the lights.
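For reference, here is one possible shape of the model, sketched in JavaScript under the “tick every second” assumption from the hint above. It is only one of many reasonable designs: the names Semaphore, Crossing, tick and lights, and the way the delay is passed to the constructor, are all choices made for this sketch. There is no GUI and no real clock; a view would call lights() and a timer would call tick().

```javascript
// One semaphore, driven by one-second ticks.
// Cycle: 60 s red, 30 s green, 10 s green + amber, then red again.
// delaySeconds shifts the cycle so that it lags a semaphore started at 0.
function Semaphore(delaySeconds) {
  var CYCLE = 100;
  var elapsed = (CYCLE - ((delaySeconds || 0) % CYCLE)) % CYCLE;

  this.tick = function() {
    elapsed = (elapsed + 1) % CYCLE;
  };

  this.lights = function() {
    if (elapsed < 60) return { red: true, green: false, amber: false };
    if (elapsed < 90) return { red: false, green: true, amber: false };
    return { red: false, green: true, amber: true };
  };
}

// The crossing is a composition of two semaphores, not a new state machine.
function Crossing() {
  var a = new Semaphore(0);   // drives A0 and A1
  var b = new Semaphore(50);  // drives B0 and B1, 50 seconds behind A

  this.tick = function() {
    a.tick();
    b.tick();
  };
  this.lightsA = function() { return a.lights(); };
  this.lightsB = function() { return b.lights(); };
}

// Usage: advance the simulation by 60 seconds without waiting for them
var crossing = new Crossing();
for (var second = 0; second < 60; second++) {
  crossing.tick();
}
var aAfterOneMinute = crossing.lightsA();
var bAfterOneMinute = crossing.lightsB();
```

After 60 ticks the A semaphores have just turned green while the B semaphores are red, which matches the seventh column of the diagram.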

Mathematics cannot prove the absence of bugs

Thursday, December 18th, 2014

Everyone is familiar with Edsger W. Dijkstra’s famous observation that “Program testing can be used to show the presence of bugs, but never to show their absence!” It seems to me that mathematics cannot prove the absence of bugs either.

Consider this simple line of code, that appears in some form in *every* business application:

database.server.address=10.1.2.3

This is a line of software. It’s an assignment of a value to a variable. And there is no mathematical way to prove that this line is correct. This line is correct only IF the given IP address is really the address of the database server that we require.

Not even TDD and unit testing can help to prove that it’s correct. What would a unit test look like?

assertEquals("10.1.2.3",

config.getProperty("database.server.address"));

This test is just repeating the contents of the configuration file. It will pass even if the address 10.1.2.3 is wrong.

So what is the *only* way to prove that this line of code is correct? You guessed it. You must run the application and see if it works. This test can be manual or automated, but still we need a *test* of the live system to make sure that that line of code is correct.